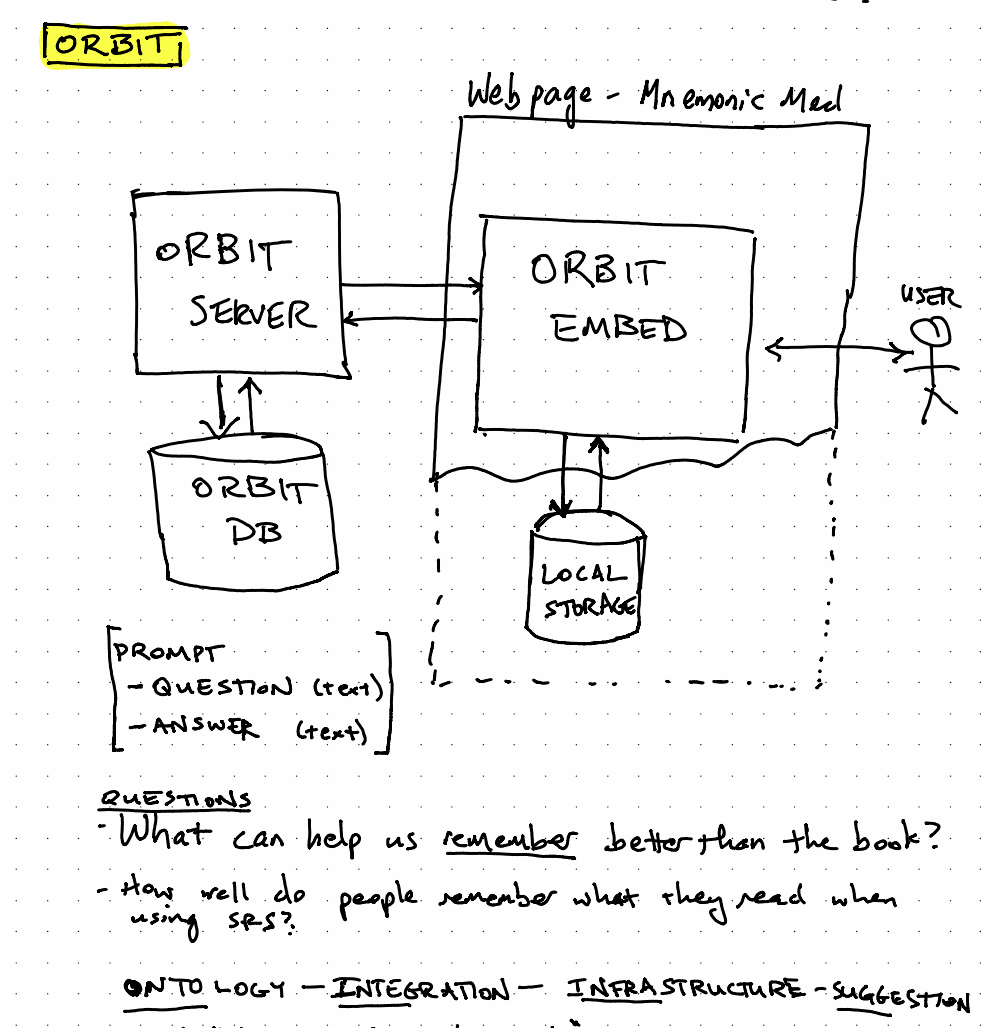

Orbit

Architecture

Experience Notes - 2021-06-04

I've been looking into the problem of how to sync SRS prompts from note-taking systems with Orbit.

The goal is to have a CLI tool that:

- Takes as input an arbitrary set of notes, most likely a folder full of markdown-formatted text files

- Parses those notes for cloze deletion and Q and A style prompts

- Syncs those prompts to Orbit

The note-sync library already accomplishes most of this, but it has a few shortcomings:

- It depends on a larger set of libraries that Andy wrote to solve a more general problem (`computer-supported-thinking`, `spaced-everything`, and `incremental-thinking`), which makes the code more complex than necessary.

- Markdown files are expected to have a certain format consistent with being exported from Bear.

- Prompts are being cached locally, which feels unnecessary, both from the perspective of performance and code complexity. (see note below about necessity of caching)

After spending a few hours reviewing the code and testing out the note-sync package, I have a few thoughts/opinions:

- It seems like it would be simpler to do a complete rewrite. The one exception is the parsing code in `incremental-thinking` that parses `qaPrompts` and `clozePrompts`. That code is well-tested and has been in use by Andy for a while.

- I don't think this package should support syncing prompts from markdown notes -> Anki. Given this package's scope is Orbit, I think it should focus on sync with Orbit only. Maybe Anki and Orbit should be able to sync with one another, so that Anki could be used as a review interface? If so, that feels like a separate concern. It looks like there is a package called `anki-import` that at least handles one-way importing.

- I don't think this package should do any local caching. Based on the discussion of Idempotency and Identity, we should be able to simply compare hashes of the prompts to know whether a prompt is new or not. We could debate whether the Orbit API should allow a duplicate prompt to be created, but at worst, we only need to grab the hashes for all existing prompts from the Orbit API and then do some hash comparisons. I admit that I don't fully understand the caching code yet, so maybe I'm missing something!
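To make the hash-comparison idea concrete, here is a minimal sketch of what it could look like without any local cache. The prompt shapes, `hashPrompt`, and `findNewPrompts` are hypothetical names for illustration, and SHA-256 over a JSON serialisation is an assumption; Orbit's actual identity scheme may differ.

```typescript
import { createHash } from "crypto";

// Hypothetical prompt shapes for illustration; the real Orbit types may differ.
type QAPrompt = { type: "qa"; question: string; answer: string };
type ClozePrompt = { type: "cloze"; body: string };
type Prompt = QAPrompt | ClozePrompt;

// Derive an ID from the prompt's content only. Fields are serialised in a
// fixed order so the same prompt always produces the same hash.
function hashPrompt(prompt: Prompt): string {
  const canonical =
    prompt.type === "qa"
      ? JSON.stringify(["qa", prompt.question, prompt.answer])
      : JSON.stringify(["cloze", prompt.body]);
  return createHash("sha256").update(canonical, "utf8").digest("hex");
}

// Keep only prompts whose hash is not already known to the remote side.
// `remoteHashes` stands in for whatever the Orbit API would return.
function findNewPrompts(local: Prompt[], remoteHashes: Set<string>): Prompt[] {
  const seen = new Set<string>();
  return local.filter((prompt) => {
    const id = hashPrompt(prompt);
    if (remoteHashes.has(id) || seen.has(id)) return false;
    seen.add(id); // also drop duplicates within the local set
    return true;
  });
}
```

With this approach, the only state the importer needs per run is the set of hashes fetched from the server. Note that any edit to a prompt's text yields a different hash, so an edited prompt would be treated as new.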

And here are a few questions:

Provenance

How should this library handle provenance? Broadly, I have questions about how Orbit thinks about provenance, but scoping my questions to this library, it appears that the current implementation is caching provenance information locally, but not syncing that provenance information to the Orbit API.

The current implementation depends on a Bear Note ID at the bottom of the markdown file to determine provenance, which is obviously undesirable as notes could be exported from a variety of different note-taking systems.

If we'd like to track provenance, we could use the note's filename and modified date to populate the provenance data Orbit requires. Here's the PromptProvenanceType filled out:

    {
      externalID: hash(note.filename),
      title: note.filename,
      modificationTimestampMillis: note.lastModified,
    }

One gotcha with provenance follows from the way we're handling Idempotency: moving a prompt from one file to another, so long as the prompt didn't change at all, would change the provenance information but not the identity of the prompt itself. Keeping the identity stable is probably desirable for the prompt, but the provenance recorded for it would then be out of date. We'll need to account for that.
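As a rough sketch of both points, the snippet below builds the provenance record from a note's filename and modified date, then flags prompts whose identity is unchanged but whose source note differs. `NoteFile`, `provenanceForNote`, and `findMovedPrompts` are hypothetical names, the `Provenance` interface only mirrors the three fields listed above, and SHA-256 is an assumed choice for `hash`. Where the "previous" provenance comes from (the Orbit API or elsewhere) is left open.

```typescript
import { createHash } from "crypto";

// Mirrors the three fields listed above; the real Orbit type may carry more.
interface Provenance {
  externalID: string;
  title: string;
  modificationTimestampMillis: number;
}

// Hypothetical minimal description of a note on disk.
interface NoteFile {
  filename: string;
  lastModified: number; // epoch milliseconds
}

// Build provenance from filename and modified date, as proposed above.
function provenanceForNote(note: NoteFile): Provenance {
  return {
    externalID: createHash("sha256").update(note.filename, "utf8").digest("hex"),
    title: note.filename,
    modificationTimestampMillis: note.lastModified,
  };
}

// Both maps are keyed by prompt ID (the content hash). Returns the IDs of
// prompts that exist in both but now come from a different note.
function findMovedPrompts(
  previous: Map<string, Provenance>,
  current: Map<string, Provenance>,
): string[] {
  const moved: string[] = [];
  current.forEach((provenance, promptID) => {
    const before = previous.get(promptID);
    if (before !== undefined && before.externalID !== provenance.externalID) {
      moved.push(promptID);
    }
  });
  return moved;
}
```

One consequence of hashing the filename: renaming a note would look the same as moving every prompt in it to a new note.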

Again, there's a general question of whether we need to track provenance at all for this importer.

Annotations

I've been thinking more generally about what this library accomplishes and how

How It Should Work

- Iterate over a set of plaintext files (do they need to be md?)

- Parse each file for:

  - `{}` - paragraphs containing cloze deletions

  - `Q:` and `A:` blocks

- For each SRS prompt, create a task in Orbit

  - Do not overwrite existing tasks that have the same identity

    - Hash the prompt, check the hash of prompts already in Orbit (should the client do this? should Orbit do this?)

  - Upload the task to Orbit
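The parsing step above can be sketched with plain string handling. This is a deliberately simplified stand-in, not the remark-based parser in `incremental-thinking`; it assumes prompts are separated from surrounding text by blank lines, that a Q&A block is a paragraph starting with `Q:` followed by a line starting with `A:`, and that any paragraph containing `{...}` is a cloze prompt. `parsePrompts` and the prompt types are hypothetical names.

```typescript
// Hypothetical prompt shapes for illustration; the real Orbit types may differ.
type QAPrompt = { type: "qa"; question: string; answer: string };
type ClozePrompt = { type: "cloze"; body: string };
type Prompt = QAPrompt | ClozePrompt;

function parsePrompts(text: string): Prompt[] {
  const prompts: Prompt[] = [];
  // Treat runs of text separated by one or more blank lines as paragraphs.
  const paragraphs = text
    .split(/\r?\n\s*\r?\n/)
    .map((paragraph) => paragraph.trim())
    .filter((paragraph) => paragraph.length > 0);

  for (const paragraph of paragraphs) {
    // A Q&A block: "Q: ..." followed on a later line by "A: ...".
    const qa = /^Q:\s*([\s\S]+?)\r?\nA:\s*([\s\S]+)$/.exec(paragraph);
    if (qa) {
      prompts.push({ type: "qa", question: qa[1].trim(), answer: qa[2].trim() });
    } else if (/\{[^{}]+\}/.test(paragraph)) {
      // A paragraph with at least one {deletion}; the whole paragraph is the prompt.
      prompts.push({ type: "cloze", body: paragraph });
    }
  }
  return prompts;
}
```

Because nothing here depends on markdown syntax, this would work on any plaintext file, which bears on the "do they need to be md?" question. A real implementation would likely want the markdown AST to avoid matching braces inside code blocks.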

From `taskCache.ts`:

    const prompts = flat(updateLists.map((update) => update.prompts));
    await apiClient.storeTaskData(
      await Promise.all(
        prompts.map(async (prompt) => ({
          id: await getIDForPrompt(prompt),
          data: prompt,
        })),
      ),
    );

Notes on markdown parsing

Parsing:

- `remark-parse` is being used to parse the markdown file.

  - It's loaded in `incremental-thinking` in `processor.ts` along with a host of plugins

QA/Cloze Prompts:

- Both are written as plugins

Notes on Setup

- How to get started wasn't obvious from the Readme. Found the `yarn install` embedded in a paragraph of text.

- note-sync (`yarn sync`)

- Relax the constraint of reading Markdown files exported from Bear

- Should note-sync only work with Orbit? Should it also work with Anki?

- Having a Markdown->Anki export (that doesn't go to Orbit) feels weird.

- Having Anki and Orbit stay in sync, maybe that makes sense!

- In any case, Anki support feels like a different "package" to me.

- Should note-sync really do any caching? Is it so slow reading from disk?

- What is being cached exactly?

  - Remote Prompt States

  - How is it invalidated?

- I don't understand the data model - would be helpful to have it documented somewhere.

- What are paths?

- Does it make sense to assign all tasks from the same note to a path?

- Also, it appears that Orbit knows nothing about tasks.

- Why do we need an external note ID?

- When editing a paragraph that contains a Cloze Deletion, any change to the text of the paragraph will result in the creation of a new Orbit Task

- When parsing the markdown files for prompts, we should use Andy's code from `incremental-thinking` for parsing `qaPrompts` and `clozePrompts`.

  - It's already tested and Andy has been using it for the last year or so.

- How can I get to a "review" interface?