Towards a Unified Schema for Software

Schemas facilitate understanding in communication

Schemas exist to facilitate communication and aid comprehension. A schema is a way of defining expectations for the kinds of content (which I will call "types" below) that will be sent or received, and it helps the recipient interpret the message.

Schemas exist in human life wherever communication takes place, and they existed even before writing. Even when communication is oral, there are expectations about the "type" of answer one might give to a question. Consider the following exchange:

- Speaker A: How many sheep do you have?

- Speaker B: Sunshine.

- Speaker A: What?? I was asking for a number.

- Speaker B: Sorry, when you asked about my sheep I thought of my favorite sheep, Sunshine. I have twelve.

Indeed, humor often uses a "schema violation" to surprise the hearer as the core of the joke. See Abbott and Costello's famous "Who's on First?" sketch.

Written communication makes schema even more important since the reading is done asynchronously

After the invention of writing, schema became even more important because the reader would be consuming the written content asynchronously. The "writer" wouldn't necessarily be around to clarify the statement in the case of schema violation.

Over time, we developed conventions about how to specify the "type" that a writer was using when they wrote down a message. Tables or spreadsheets are one such convention. Each column has a specific "type" to it.

(picture of a ledger)

Consider the following:

(picture of a check with the incorrect "type" in all of the fields)

When the bank receives the above check, they will be unable to deposit it. It fails "schema validation." It's not about whether a specific account has the correct amount of money in it. Rather, the message is nonsensical because the types of the fields do not conform to an expected schema.
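
The difference between a malformed check and an unfunded one can be sketched in code. The field names and rules below (`payee`, `amountCents`, `date`) are assumptions made for illustration, not any bank's actual format. Note that the validator never looks at an account balance.

```typescript
// Hypothetical check fields: payee (text), amountCents (whole number), date (YYYY-MM-DD).
// Returns a list of schema violations; an empty list means the check is well-formed.
function validateCheck(input: { [field: string]: unknown }): string[] {
  const errors: string[] = [];
  const payee = input.payee;
  const amount = input.amountCents;
  const date = input.date;

  if (typeof payee !== "string" || payee.length === 0) {
    errors.push("payee must be non-empty text");
  }
  if (typeof amount !== "number" || amount <= 0 || Math.floor(amount) !== amount) {
    errors.push("amountCents must be a positive whole number");
  }
  if (typeof date !== "string" || !/^\d{4}-\d{2}-\d{2}$/.test(date)) {
    errors.push("date must look like YYYY-MM-DD");
  }
  return errors;
}

// A well-formed check, and one with the wrong "type" in every field.
const goodCheck = validateCheck({ payee: "Jane Roe", amountCents: 12000, date: "2024-03-01" });
const badCheck = validateCheck({ payee: 42, amountCents: "twelve", date: "Sunshine" });
```

Whether the account can cover the amount is a separate question that only makes sense once `validateCheck` returns no errors.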

Schemas consist of types

What are these "kinds" of data, anyways? In computing, we often refer to them as "types." There are only a few "basic types":

- Text (characters)

- Numbers (digits, numerals, integers, decimals)

- Date & Time (one could argue that datetime is a higher-level type composed of the basic type number, and certainly some computer languages represent them this way: see UNIX timestamps)

- Booleans (yes/no, true/false, perhaps also a special case of the text type)

Looking carefully at the basic types, we discover things are much more interesting. Text can describe a name of a city or a person, a brand of an automobile, a type of cloud, a command, so many things.

We build up a set of complex types from these basic building blocks: cities and states, company names, categories of things, even parts of speech. Though we most often use these basic types in software to define data, the interesting part of schema consists of these higher-level types.
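
In TypeScript, one way to sketch the step from basic types to higher-level types is to "brand" a basic type, so that the compiler treats a city name and an email address as distinct kinds of text. The `Brand` helper and the `CityName` and `EmailAddress` types are hypothetical names chosen for this example.

```typescript
// A "brand" tags a basic type so that two kinds of text are not interchangeable.
type Brand<Base, Name extends string> = Base & { readonly __brand: Name };

type CityName = Brand<string, "CityName">;
type EmailAddress = Brand<string, "EmailAddress">;

// Constructors are the only place where plain text is promoted to a higher-level type.
function cityName(text: string): CityName {
  if (text.trim().length === 0) {
    throw new Error("a city name cannot be empty");
  }
  return text as CityName;
}

function emailAddress(text: string): EmailAddress {
  if (text.indexOf("@") < 1) {
    throw new Error("an email address needs a local part followed by '@'");
  }
  return text as EmailAddress;
}

// A complex type built from the higher-level types above.
interface Contact {
  name: string;
  city: CityName;
  email: EmailAddress;
}

const contact: Contact = {
  name: "John Doe",
  city: cityName("Lisbon"),
  email: emailAddress("john@doe.org"),
};

// The next line would be rejected by the compiler: plain text is not a CityName.
// const wrong: Contact = { name: "x", city: "Lisbon", email: emailAddress("a@b.c") };
```

At run time both values are still ordinary strings; the distinction exists only in the type system, which is exactly where a schema does its work.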

So, a schema is simply defining the type of response that's expected when communicating a message to someone, whether orally or in writing.

Schemas in Software Systems

Schemas are everywhere in computing systems. Schemas are the types in our programming languages that allow us to communicate with the compiler and with other software developers. Schemas are the descriptions of our databases that specify how data is represented. Schemas are the public members of a class, which tell callers which functions exist and which types those functions expect. Schemas are our REST APIs, our GraphQL APIs, our tRPC. Any process-to-process communication, whether passing data back and forth or communicating between different computing systems, is described by one of a variety of schema languages. Even event systems within a single program leverage schemas.

Our user interfaces are also rich in schema. Think about forms, or any data we present on the screen: there are schemas we expect or accept for input, and schemas we expect for output. Consider a tweet and break it down into its elements:

- the message itself

- the time at which it was sent

- the location from which it was sent

- the person who sent it, their username and profile image

- a whole variety of other fields, each with its own type.

Schemas are everywhere in software, and we have a variety of ways of making explicit the types of things.
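
Written down as TypeScript types, the elements of a tweet listed above might look like the following. The field names are illustrative guesses, not the actual data model of any service.

```typescript
// Illustrative field names only; this is not the real data model of any service.
interface TweetAuthor {
  username: string;
  profileImageUrl: string;
}

interface Tweet {
  message: string;
  sentAt: string; // ISO 8601 timestamp
  sentFrom?: { latitude: number; longitude: number }; // the location is optional
  author: TweetAuthor;
}

// Even rendering relies on the schema: each field is used according to its type.
function renderTweet(tweet: Tweet): string {
  const place = tweet.sentFrom
    ? ` (${tweet.sentFrom.latitude.toFixed(2)}, ${tweet.sentFrom.longitude.toFixed(2)})`
    : "";
  return `@${tweet.author.username} at ${tweet.sentAt}${place}: ${tweet.message}`;
}

const rendered = renderTweet({
  message: "Schemas are everywhere",
  sentAt: "2024-03-01T12:00:00Z",
  author: { username: "jdoe", profileImageUrl: "https://example.com/jdoe.png" },
});
```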

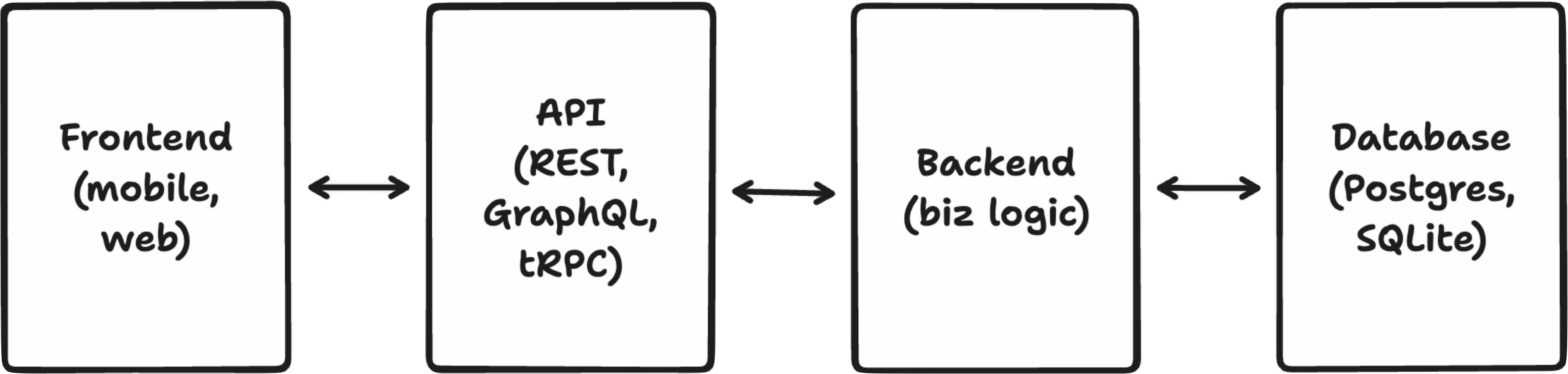

Schema in client-server architecture

We've established that schemas are everywhere in software. Let's focus on schemas in one specific domain: web and mobile applications.

The default architecture for web and mobile systems today is client-server, sometimes called a layered or "n-tier" architecture.

With a client-server architecture we disperse the schema across the system in order to avoid over-coupling and enable the independent systems to develop more quickly.

- DATABASE: Schemas in the database are often defined in SQL itself (think of the output of pg_dump)

- BACKEND: Schemas may be defined again in the ORM layer

- Validations add information about the "type" of a thing that can't be represented by a simple SQL value. Where should those live?

- Validations are part of your schema! They say what the data should represent.

- API: an API specification is mostly about schema, actually. Often APIs are kind of like a schema translation layer for remote clients to be able to talk to the backend.

- We often think of it as good to avoid over-coupling of clients to the database, allowing each to iterate independently.

- FRONTEND: TypeScript types are schemas! They help us pave over the rough edges of JavaScript, and they are yet another schema.

- Also, state management in the frontend may store the data again with another schema.

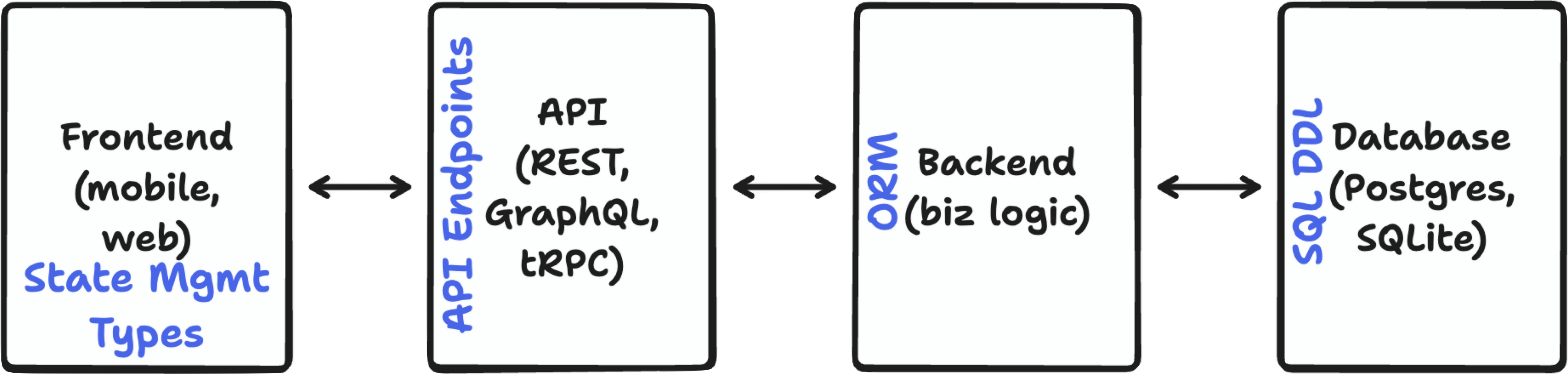

This results in systems that are difficult to change and reason about. Consider a small change to an app and all the places you'd have to make that change:

- front-end app - add a single field

- add a client-side validation

- change the API endpoint

- update the backend types

- maybe the validation isn't supported by the DB

- add the column to the database itself

- a single change cascades throughout the system, requiring many changes
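
To make the cascade concrete, the sketch below describes one record four times, once per layer. All of the type and function names are hypothetical. The point is only that adding a field, such as a phone number, means editing every declaration and every mapping function.

```typescript
// DATABASE: the row as stored (snake_case columns).
interface ContactRow { id: number; full_name: string; email: string }

// BACKEND: the ORM model, with a validation the database cannot express.
interface ContactModel { id: number; fullName: string; email: string }
function toModel(row: ContactRow): ContactModel {
  if (row.email.indexOf("@") < 0) throw new Error("invalid email");
  return { id: row.id, fullName: row.full_name, email: row.email };
}

// API: the shape sent over the wire to remote clients.
interface ContactDto { id: string; name: string; email: string }
function toDto(model: ContactModel): ContactDto {
  return { id: String(model.id), name: model.fullName, email: model.email };
}

// FRONTEND: the shape kept in client-side state.
interface ContactState { id: string; name: string; email: string; selected: boolean }
function toState(dto: ContactDto): ContactState {
  return { id: dto.id, name: dto.name, email: dto.email, selected: false };
}

// One record passes through three translations before it reaches the screen.
const contactState = toState(
  toDto(toModel({ id: 7, full_name: "John Doe", email: "john@doe.org" }))
);
```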

Approaches to compressing schema in client-server architecture

There have been some efforts toward streamlining the schema in client-server systems. One is Prisma. Prisma ...

Rails

RedwoodJS

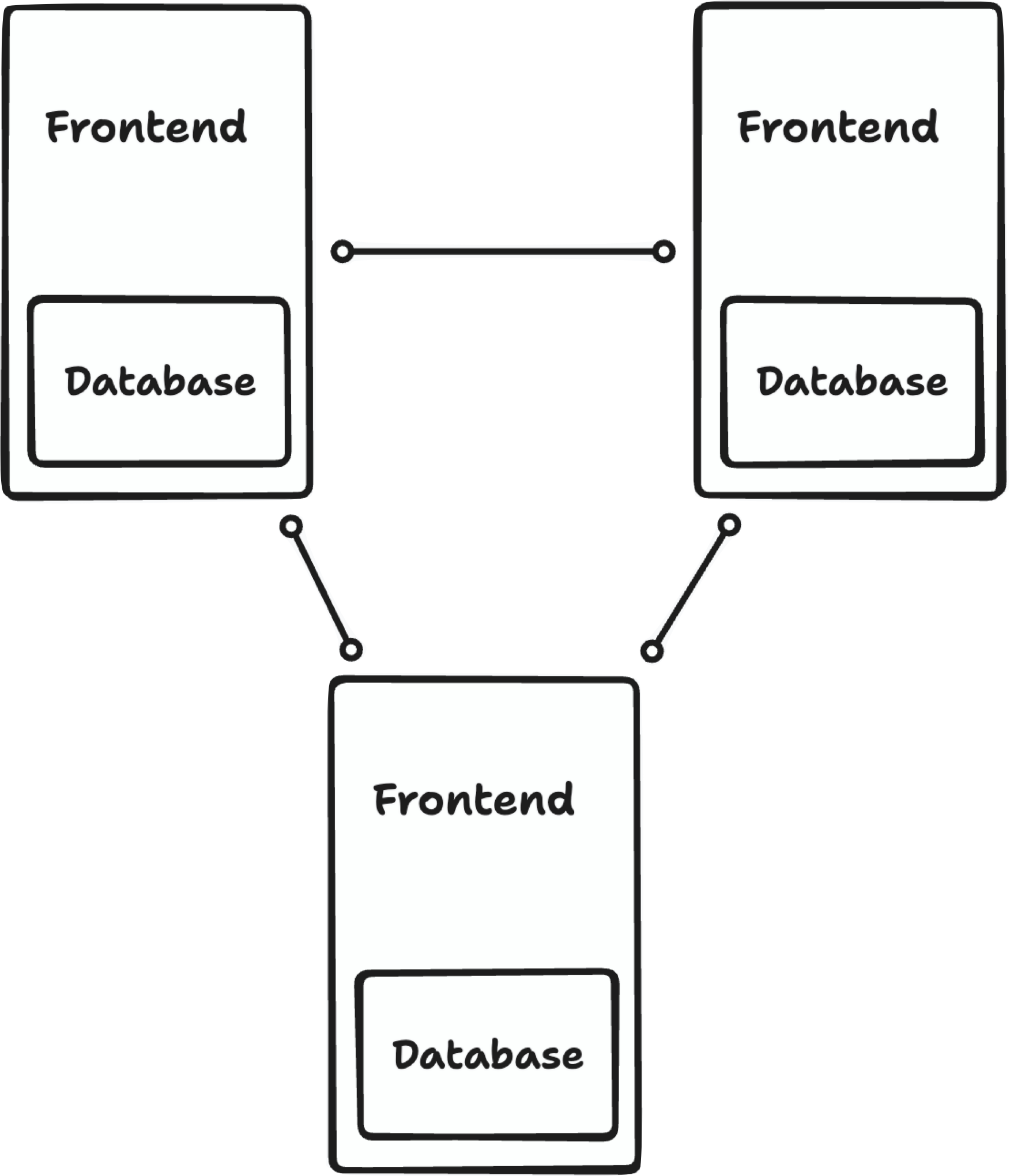

Schemas in local-first architecture

Local-first software is a set of ideals and does not prescribe a specific architecture to meet those ideals. However, the ideals imply a few characteristics of the architecture that are trending towards a common pattern. For the purposes of this article, we'll focus on one aspect of that pattern: every client application has a local copy of the data for that application on the device and that local data store gets synchronized with the data stores on other devices. The synchronization of the data store happens either via peer-to-peer networking or via a sync server.

In this architecture, the n-tier architecture of client-server has been flattened: there are only clients, each with the front-end code, the data store, and the "ORM" on a single system.

We still need schema in a local-first architecture.

Any place communication happens between two systems, there is schema involved. Ideally, it is the same schema on both sides.

Schema serves several purposes:

- Defining the shape of the data at rest and in memory

- Validating that data conforms to the schema, to ensure the integrity of the data store

- Providing types that enable compile-time type checking and improve the developer experience

- Serializing the schema so it can be distributed to other systems
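
As a rough illustration of how one definition can serve all of these purposes, the sketch below uses a tiny, dependency-free schema format. The `FieldSpec`, `Infer`, and `validate` names are invented for this example and are far simpler than Effect Schema or the DXOS implementation.

```typescript
// A deliberately tiny schema format, invented for this sketch.
type FieldSpec = { type: "string" | "number" | "boolean"; pattern?: string };
type SchemaSpec = { [field: string]: FieldSpec };

// 1. Shape: a single definition of the data.
const contactSchema = {
  name: { type: "string" },
  email: { type: "string", pattern: "^[^@]+@[^@]+$" },
  age: { type: "number" },
} as const;

// 2. Types: derived from the definition instead of being written a second time.
type TypeOf<F> =
  F extends { type: "string" } ? string :
  F extends { type: "number" } ? number : boolean;
type Infer<S> = { -readonly [K in keyof S]: TypeOf<S[K]> };
type Contact = Infer<typeof contactSchema>;

// 3. Validation: the same definition is checked against data at run time.
function validate(schema: SchemaSpec, value: object): string[] {
  const fields = value as { [field: string]: unknown };
  const errors: string[] = [];
  for (const field of Object.keys(schema)) {
    const spec = schema[field];
    const actual = fields[field];
    if (typeof actual !== spec.type) {
      errors.push(`${field}: expected ${spec.type}`);
    } else if (
      spec.pattern !== undefined &&
      typeof actual === "string" &&
      !new RegExp(spec.pattern).test(actual)
    ) {
      errors.push(`${field}: does not match pattern`);
    }
  }
  return errors;
}

const validContact: Contact = { name: "John Doe", email: "john@doe.org", age: 41 };
const noErrors = validate(contactSchema, validContact);
const someErrors = validate(contactSchema, { name: "John Doe", email: "NOT_AN_EMAIL", age: "41" });

// 4. Serialization: the definition is plain data, so it can be sent to other systems.
const wireFormat = JSON.stringify(contactSchema);
```

Because the schema here is plain data, serialization is trivial. That stops being true once validations contain arbitrary code.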

How do we bridge from "local-first apps need schema" to "the magic scenario"?

- shape of data

- at rest

- in memory

- ensure integrity of the data when moving between memory and solid state storage

- types

- UI

- validate user entry of the data

- generate the UI itself based on the schema

- APIs for connecting external systems - encode/decode/validate

- API docs

- serialize the data over the wire for network communication?

- in-app schema awareness

The Magic Scenario

A version of Composer that's JUST ECHO and Shell for connecting devices and inviting others and dynamic plugins.

- Ask Composer to construct and modify arbitrary UIs to let you interact with the underlying data.

- Sketch example plugins with TLDraw and have Composer generate them and add them to Composer automatically.

- Throw away the UIs if you don't like them.

- Attach the UI to the object if it's useful.

- Generic data browser for browsing data in the system.

- APIs for external integration are created automatically based on the data in the system.

What if React is the last web framework?

An example: unified schema in DXOS with Effect Schema

We have implemented a local-first architecture in DXOS with a unified schema using the Effect Schema library. Below we describe how we implemented it, along with some of the benefits and challenges we have experienced.

Defining a schema

The primary role of schema is to define the shape of data.

import * as S from "@effect/schema/Schema";

import * as E from "@dxos/echo-schema";

export const Contact = S.struct({

name: S.string,

email: S.string,

emoji: S.string,

color: S.string,

}).pipe(

// Give the schema a unique name and version

E.echoObject("dxos.types.contact", "0.1.0")

);

Inferring types from the schema

// E.Schema adds ECHO-specific fields to the type

export type Contact = E.Schema.To<typeof Contact>;

Code like the following will now fail type checking at compile time:

const contact: Contact = {

name: 1234,

}

Instantiating, mutating, and replicating an object

// Create a contact object and persist in ECHO

const contact = space.db.add(Contact, {

name: "John Doe",

email: "john@doe.org",

color: "#FF0000",

emoji: "👍",

});

// Directly mutate, triggering synchronization with other devices

contact.email = "johndoe@altavista.com";

Reactive objects update automatically when modified locally or by other devices

// ContactCard re-renders when contact is changed

const ContactCard = ({ contact }: { contact: Contact }) =>

<span>{contact.name}</span>;
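
How a direct assignment can trigger re-rendering and replication is not shown above. One common technique, sketched here with plain property accessors, is to intercept writes and notify subscribers. This illustrates the general idea only and does not describe how ECHO implements reactivity; `makeReactive` and `subscribe` are hypothetical names.

```typescript
type Listener = (field: string, value: unknown) => void;

// Wraps a plain object so that every assignment to one of its fields notifies listeners.
function makeReactive<T extends { [field: string]: unknown }>(
  initial: T
): { object: T; subscribe: (listener: Listener) => void } {
  const listeners: Listener[] = [];
  const stored: { [field: string]: unknown } = {};
  const reactive = {} as T;

  Object.keys(initial).forEach((field) => {
    stored[field] = initial[field];
    Object.defineProperty(reactive, field, {
      enumerable: true,
      get: () => stored[field],
      set: (value: unknown) => {
        stored[field] = value;
        // A UI layer would re-render here; a sync layer would queue the change for other devices.
        listeners.forEach((listener) => listener(field, value));
      },
    });
  });

  return {
    object: reactive,
    subscribe: (listener) => {
      listeners.push(listener);
    },
  };
}

const tracked = makeReactive({ name: "John Doe", email: "john@doe.org" });
const changes: string[] = [];
tracked.subscribe((field, value) => changes.push(`${field}=${String(value)}`));

// A plain assignment is enough to notify every subscriber.
tracked.object.email = "johndoe@altavista.com";
```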

Validations

const EMAIL_REGEX = /^[a-zA-Z0-9._-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,4}$/;

export const Contact = S.struct({

// ... omitted

email: S.string.pipe(S.pattern(EMAIL_REGEX)),

// ... omitted

});

Dynamic errors from schema

// 🔥 throws a ParseError

contact.email = "NOT_AN_EMAIL";

/* The @effect/schema ParseError

Parsing failed:

{ email: "NOT_AN_EMAIL" }

└─ ["email"]

└─ does not match pattern

*/

These parse errors can be used to generate human-readable error messages for user interfaces and API integrations.
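
As an illustration of that last step, the sketch below turns a structured validation error into a message a form could display. The `FieldError` shape is a simplified stand-in invented for this example; it is not the actual structure of an `@effect/schema` `ParseError`.

```typescript
// A simplified stand-in for a structured parse error (hypothetical shape).
interface FieldError {
  path: string[]; // e.g. ["email"]
  reason: "missing" | "wrong-type" | "pattern";
}

// Friendly labels a UI might keep per field; unknown fields fall back to the raw path.
const fieldLabels: { [path: string]: string } = { email: "Email address", name: "Name" };

function toUserMessage(error: FieldError): string {
  const key = error.path.join(".");
  const label = fieldLabels[key] !== undefined ? fieldLabels[key] : key;
  switch (error.reason) {
    case "missing":
      return `${label} is required.`;
    case "wrong-type":
      return `${label} has the wrong kind of value.`;
    case "pattern":
      return `${label} is not in the expected format.`;
  }
}

const userMessage = toUserMessage({ path: ["email"], reason: "pattern" });
```

The same mapping could back an API error response, with the `path` returned alongside the message so that clients can highlight the offending field.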

Schema serialization and discovery

We serialize the schema to JSON Schema and save it to the data store. This enables any reader of the data store to inspect the shape of the data and maintain schema integrity through the validations.
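
For readers unfamiliar with JSON Schema, the sketch below shows roughly what a serialized contact schema could look like and how it might round-trip through a store. The `toJsonSchema` helper, the in-memory `schemaStore`, and the storage key format are all hypothetical; DXOS's actual serialization code is not shown in this article.

```typescript
type SimpleField = { type: "string" | "number" | "boolean"; pattern?: string };

// Builds a JSON Schema document (standard keywords only) from a simple field map.
function toJsonSchema(title: string, fields: { [fieldName: string]: SimpleField }) {
  const properties: { [fieldName: string]: { type: string; pattern?: string } } = {};
  for (const fieldName of Object.keys(fields)) {
    const field = fields[fieldName];
    properties[fieldName] =
      field.pattern === undefined
        ? { type: field.type }
        : { type: field.type, pattern: field.pattern };
  }
  return { title: title, type: "object", properties: properties, required: Object.keys(fields) };
}

// A stand-in for the data store: schemas are saved as JSON text next to the data.
// The "name@version" key format is an assumption made for this sketch.
const schemaStore: { [key: string]: string } = {};
schemaStore["dxos.types.contact@0.1.0"] = JSON.stringify(
  toJsonSchema("Contact", {
    name: { type: "string" },
    email: { type: "string", pattern: "^[^@]+@[^@]+$" },
  })
);

// Any reader can later load the schema and inspect the shape of the data.
const loadedSchema = JSON.parse(schemaStore["dxos.types.contact@0.1.0"]);
```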

Interesting Schema-enabled Scenarios

When you have a single unified schema across the entire architecture, new scenarios are enabled.

Generate UI from the schema (tables)
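
A minimal sketch of table generation, assuming the schema is available at run time as a simple map of field names to basic types. The `columnsFor` and `renderRow` helpers are hypothetical and return plain strings, so the idea stays independent of any UI framework.

```typescript
type BasicType = "string" | "number" | "boolean";
type TableSchema = { [fieldName: string]: BasicType };

interface TableColumn {
  field: string;
  header: string;
  align: "left" | "right";
}

// Derives column definitions from the schema: numbers are right-aligned, headers are capitalized.
function columnsFor(schema: TableSchema): TableColumn[] {
  return Object.keys(schema).map((field): TableColumn => ({
    field: field,
    header: field.charAt(0).toUpperCase() + field.slice(1),
    align: schema[field] === "number" ? "right" : "left",
  }));
}

// Renders one data row as text cells, in column order.
function renderRow(columns: TableColumn[], row: { [fieldName: string]: unknown }): string {
  return columns.map((column) => String(row[column.field])).join(" | ");
}

const tableColumns = columnsFor({ name: "string", email: "string", age: "number" });
const headerLine = tableColumns.map((column) => column.header).join(" | ");
const firstRow = renderRow(tableColumns, { name: "John Doe", email: "john@doe.org", age: 41 });
```

Because the columns are computed from the schema, adding a field to the schema adds a column without any change to the table code.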

Modify schema on the fly (tables)

Cross-app interop with runtime schema discovery

By serializing the schema to the database, we enable cross-app interop scenarios where two different applications can read/write from the same data store simultaneously while maintaining schema integrity.

Inter-app interop via drag-and-drop

Composer's drag-and-drop functionality is schema-aware and uses an inversion of control model to enable plugins to expose functionality about themselves to other plugins.

Schema-shaped responses from LLMs

We can write LLM prompts that request responses in the shape of the schema. For example:

prompt coming soon
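
Independently of the specific prompt, the general technique can be sketched as follows: embed the serialized schema in the request, then treat the reply as untrusted external data and parse it before use. This is purely an illustration and not the prompt or code used in DXOS; `buildPrompt` and `parseContactReply` are hypothetical helpers, and no model is actually called.

```typescript
// Hypothetical helper: embeds a JSON Schema in a prompt so the model is asked for conforming JSON.
function buildPrompt(task: string, jsonSchema: object): string {
  return [
    task,
    "Respond with a single JSON object that conforms to this JSON Schema:",
    JSON.stringify(jsonSchema, null, 2),
    "Do not include any text outside the JSON object.",
  ].join("\n\n");
}

// Model output is untrusted text: parse it, then check it like any other external data.
function parseContactReply(reply: string): { name: string; email: string } | undefined {
  let parsed: unknown;
  try {
    parsed = JSON.parse(reply);
  } catch (error) {
    return undefined;
  }
  if (typeof parsed !== "object" || parsed === null) return undefined;
  const candidate = parsed as { [field: string]: unknown };
  const nameValue = candidate.name;
  const emailValue = candidate.email;
  if (typeof nameValue !== "string" || typeof emailValue !== "string") return undefined;
  return { name: nameValue, email: emailValue };
}

const contactPrompt = buildPrompt("Extract the contact mentioned in the text below.", {
  type: "object",
  properties: { name: { type: "string" }, email: { type: "string" } },
  required: ["name", "email"],
});

// Two example replies: one that conforms to the schema and one that does not.
const parsedReply = parseContactReply('{"name":"John Doe","email":"john@doe.org"}');
const rejectedReply = parseContactReply("Sure! Here is the contact you asked for.");
```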

Future Research

Schema migration

If you have any experience with schemas in software systems, you have probably been wondering how we handle schema change. The short answer is we do not handle this case yet. At this time, we do not do automatic data migrations based on changes to the schema. We see the above work as creating a foundation on which we can build a robust schema migration system.

Schema serialization with arbitrary code execution

As mentioned above, we currently serialize schemas to JSON Schema and store them in the data store. However, JSON Schema does not serialize filters (Effect Schema validations) that contain arbitrary code, so some schema validity will not be maintained across systems. We intend to investigate distributing schemas as JavaScript packages and dynamically loading those packages from a schema repository at runtime, enabling arbitrary code to be serialized as well.
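
The limitation is easy to reproduce with plain JSON. In the sketch below, a hypothetical field definition carries both a declarative `pattern` and a `filter` function, and only the former survives serialization. The object shape is invented for illustration and is not Effect Schema's internal representation.

```typescript
// A hypothetical field definition with one declarative rule and one code-based rule.
const emailField = {
  type: "string",
  pattern: "^[^@]+@[^@]+$",
  // Arbitrary code: reject addresses from a blocked domain.
  filter: (value: string): boolean => !/@blocked\.example$/.test(value),
};

// JSON has no representation for functions, so JSON.stringify silently omits `filter`.
const overTheWire = JSON.stringify(emailField);
const received = JSON.parse(overTheWire);

// The receiving system can still enforce `pattern`, but no longer knows about `filter`.
const patternSurvived = received.pattern === emailField.pattern;
const filterSurvived = typeof received.filter === "function";
```

Here `patternSurvived` is `true` and `filterSurvived` is `false`, which is why a receiving system can only enforce the declarative part of the schema.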

Outline

- What is a Schema and Why do we have them?

- Schema facilitate understanding in communication

- Implicit schema exists even in oral communication

- Who's on First

- Written communication makes schema even more important since the reading is done asynchronously

- Schemas consist of types

- Schema is simply defining the type of response that's expected

- Schema in Software

- Schema, schema, everywhere

- Schema in traditional client-server applications

- Many different schemas, difficult to align

- Schemas in a local-first architecture

- Local-first is a set of ideals, but a general architecture fulfills the ideals

- Move the database onto the client

- Synchronize the database between clients

- Schema needs:

- TYPES: human-compiler communication

- generating TypeScript types

- DATA AT REST, VALIDATION: program-to-database communication

- Encode and decode when reading and writing

- PARSING: program-to-program communication

- parsing external data

- USER INTERFACE: end-user-to-program communication

- user interface

- Serialize the schema to the database and synchronize over the network for run-time schema discovery

- An example: Unified schema in DXOS with Effect Schema

- Effect Schema overview

- Code examples of Schema in DXOS

- Schema definition

- Validations

- Errors

- Schema serialization and discovery

- Inversion of control with Composer drag-n-drop

- Challenges:

- Schema migration

- Schema serialization when validations consist of arbitrary code

- LLMs and Schema: recovering flexible schema interpretation

Feedback from Jonathan Edwards:

First of all, I’m not clear exactly what is the software stack you are proposing. Is there a relational database still involved or some new kind of database? If relational, then you need to explain how things will work better than all the prior attempts to use Object Relational Mappings as in Java and Rails. If not relational then exactly what is it and how is it better than things like Mongo and Firebase? People have tried and failed to do this before, and while it is certainly worth trying again you need to explain what is different this time. The best way to do this is to identify at least one of the failures of past approaches and show a new solution. That is what is needed to publish a research paper.

Sometimes there aren’t clear-cut new solutions and instead just a lot of little improvements that make life easier. That is generally referred to as “design”, and is harder to argue for in a paper. E.G. Rails is very nicely designed, but hard to boil down to a paper - demos work better for that.

It doesn't matter what kind of database is used, whether relational or a k-v store. The software stack is to colocate the database with the client and keep the database synchronized across clients using an event-based